LLM Farcaster Frame

2024-02-02

The LLM Frame

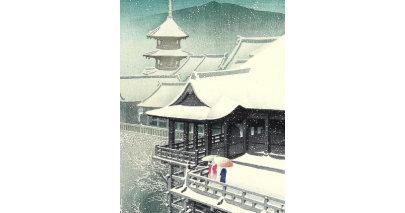

Frames launched on Jan 26th and brought parabolic daily active user growth to the Farcaster protocol. At first, frames supported only images and buttons: a developer pointed the frame at an endpoint, which received a POST request on each button click. A week later, frames gained a text input field. Immediately, I started thinking about taking the text a user types and running it through an LLM. What could I do with it? I had been posting a lot about art and history, and I had come across a beautiful print by Hasui Kawase. I thought it would be neat to have the frame ask the user for their thoughts on the piece and respond with an LLM one-liner.

How Could I Do That?

The first frame, with the text input, is easy to set up with the Farcaster Open Graph tags; the frame docs cover how. All I needed were the tags for the input field, the image, and the API URL that receives the POST request when the button is clicked. Once the first frame is in place, you need an API route to process the text input and return a response. That's the fun part.
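
As a rough sketch of that first frame, the helper below assembles the meta tags into an HTML page. The function name, the example URLs, and the button label are all made up for illustration, and the tag names reflect my reading of the frame spec at the time, so check them against the frame docs before relying on them.

```javascript
// Escape characters that would break out of an HTML attribute value.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Hypothetical helper: builds the HTML for a frame with an image,
// a text input, one button, and the URL that receives the POST.
function buildInputFrameHtml({ imageUrl, postUrl, placeholder, buttonLabel }) {
  const tags = [
    ["fc:frame", "vNext"],
    ["fc:frame:image", imageUrl],
    ["og:image", imageUrl],
    ["fc:frame:input:text", placeholder],
    ["fc:frame:button:1", buttonLabel],
    ["fc:frame:post_url", postUrl],
  ];
  const meta = tags
    .map(
      ([property, content]) =>
        `<meta property="${property}" content="${escapeAttr(content)}" />`
    )
    .join("\n    ");
  return `<!DOCTYPE html>\n<html>\n  <head>\n    ${meta}\n  </head>\n</html>`;
}

// Example with placeholder URLs.
const firstFrameHtml = buildInputFrameHtml({
  imageUrl: "https://example.com/kawase-print.png",
  postUrl: "https://example.com/api/respond",
  placeholder: "What do you think of this piece?",
  buttonLabel: "Submit",
});
```

Serving `firstFrameHtml` from any public URL should be enough for a frame-aware client to render the image, the input field, and the button.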

The API Route

The route is a simple Next.js API route. It takes the input text and sends it to OpenAI's GPT-3.5-turbo model with a prompt that asks it to poke fun at the user's input.

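    import OpenAI from "openai";

    // Inside the route handler: the frame client POSTs the typed text
    // in untrustedData.inputText.
    const { untrustedData } = req.body;
    const inputText = untrustedData?.inputText ?? "";

    // The API key is read from the environment.
    const openai = new OpenAI({
      apiKey: process.env.OPENAI_API_KEY,
    });

    // Ask the model for a one-liner about the user's input.
    const response = await openai.chat.completions.create({
      messages: [
        {
          role: "system",
          content: `The user is looking at an art piece by Hasui Kawase and this is their thoughts. Give a funny one-liner response, poking fun at them: ${inputText}`,
        },
      ],
      model: "gpt-3.5-turbo",
    });

    // content can be null, so fall back to an empty string before trimming.
    const imgText = (response.choices[0].message.content ?? "").trim();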
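
The snippet above puts the user's text inside the system message. One possible variation, not what the route above does, is to keep the instructions in the system message and pass the user's text as a separate, length-capped user message, so that whatever someone types is less likely to be read as instructions. The helper name, the reworded prompt, and the 256-character cap are my own assumptions.

```javascript
// Hypothetical cap on how much user text is forwarded to the model.
const MAX_INPUT_LENGTH = 256;

// Hypothetical helper: builds the messages array for the chat completion,
// keeping instructions and user text in separate roles.
function buildMessages(inputText) {
  // Treat missing input as empty, trim whitespace, then enforce the cap.
  const cleaned = String(inputText ?? "").trim().slice(0, MAX_INPUT_LENGTH);
  return [
    {
      role: "system",
      content:
        "The user is looking at an art piece by Hasui Kawase and shares their thoughts. " +
        "Give a funny one-liner response, poking fun at them.",
    },
    { role: "user", content: cleaned },
  ];
}
```

The result can be passed as `messages: buildMessages(inputText)` in the `openai.chat.completions.create` call.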

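The one-liner is then drawn onto an image with a small helper that word-wraps the text, centers it vertically, and returns the PNG as a base64 string:

    // Assumes the node-canvas package ("canvas"); the original snippet
    // does not show where createCanvas comes from.
    import { createCanvas } from "canvas";

    export async function createTextImage(text) {
      const ratio = 1.9;
      const width = 800;
      const height = Math.round(width / ratio);
      const margin = 10;
      const canvas = createCanvas(width, height);
      const context = canvas.getContext("2d");

      // White background, black text.
      context.fillStyle = "#fff";
      context.fillRect(0, 0, width, height);
      context.fillStyle = "#000";
      context.font = "30px Arial";
      context.textAlign = "left";
      context.textBaseline = "top";

      // Greedy word wrap: keep adding words until the line no longer fits
      // between the left and right margins.
      const maxLineWidth = width - 2 * margin;
      const lines = [];
      const words = text.split(" ");
      let currentLine = words[0];
      for (let i = 1; i < words.length; i++) {
        const word = words[i];
        const lineWidth = context.measureText(currentLine + " " + word).width;
        if (lineWidth < maxLineWidth) {
          currentLine += " " + word;
        } else {
          lines.push(currentLine);
          currentLine = word;
        }
      }
      lines.push(currentLine);

      // Center the block of lines vertically.
      const lineHeight = 30;
      let y = (canvas.height - lines.length * lineHeight) / 2;
      for (const line of lines) {
        context.fillText(line, margin, y);
        y += lineHeight;
      }

      // Encode the PNG as a base64 string.
      const buffer = canvas.toBuffer("image/png");
      return buffer.toString("base64");
    }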
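
Since `createTextImage` returns a base64 string, one way to get it back to the user is to embed it as a data URI in the image tag of the response frame. The sketch below assumes the frame client accepts data URIs for images and that the image is small enough for it; the helper name is hypothetical, and this is a guess at the final step rather than the exact code behind the frame.

```javascript
// Hypothetical helper: wraps a base64-encoded PNG in a response frame.
// Base64 output only contains A-Z, a-z, 0-9, "+", "/" and "=",
// so it is safe to place inside the attribute without extra escaping.
function buildResponseFrameHtml(base64Png) {
  const imageSrc = `data:image/png;base64,${base64Png}`;
  return [
    "<!DOCTYPE html>",
    "<html>",
    "  <head>",
    '    <meta property="fc:frame" content="vNext" />',
    `    <meta property="fc:frame:image" content="${imageSrc}" />`,
    `    <meta property="og:image" content="${imageSrc}" />`,
    "  </head>",
    "</html>",
  ].join("\n");
}
```

In the API route, the returned string could be sent back with `res.setHeader("Content-Type", "text/html")` followed by `res.status(200).send(html)`.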